Can machines pick up sarcasm?

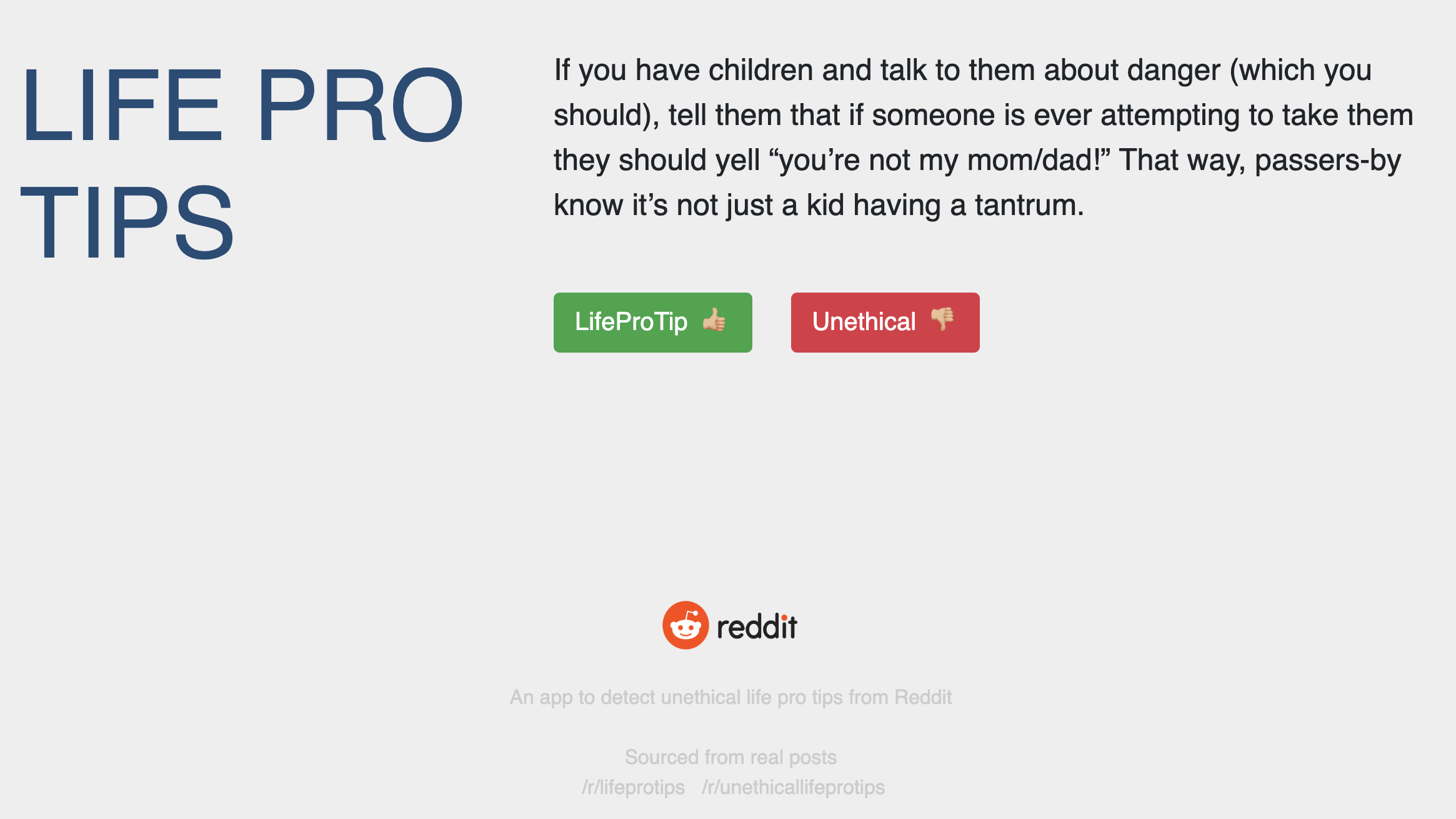

What's the difference between a life pro tip and one that is a bit more questionable? The difference can be subtle, or a moral grey area that is hard to distinguish, even for humans.

Life Pro Tip:

A concise and specific tip that improves life for you and those around you in a specific and significant way.

Example: "If you want to learn a new language, figure out the 100 most frequently used words and start with them. Those words make up about 50% of everyday speech, and should be a very solid basis."

An Unethical Life Pro Tip is a tip that improves your life in a meaningful way, perhaps at the expense of others and/or with questionable legality. Due to their nature, do not actually follow any of these tips–they're just for fun.

Example: "Save business cards of people you don't like. If you ever hit a parked car accidentally, just write "sorry" on the back and leave it on the windshield."

Let's collect posts (web scraping) from two subreddits and build a machine learning model using Natural Language Processing (NLP) to classify which subreddit a particular post belongs to. Can my model pick up on sarcasm, internet 'trolling', or the tongue-in-cheek semantics of a sentence? Probably not, but let's try. I hope you have as much fun playing with this as I did making it.

If you're feeling lucky, visit my app for a Life Pro Tip!

Reddit API

Fortunately, Reddit provides a public JSON endpoint, so we can easily consume that format and load it into a pandas DataFrame. Simply add .json at the end of the URL.

If you plan to run your own GET requests, keep in mind that Reddit returns 25 posts per request by default. Inside your for loop, call time.sleep() in Python (or something equivalent) between requests to avoid a 429 Too Many Requests error.
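The paging loop described above can be sketched as follows. This is a minimal sketch, not the code in reddit_garry.py: `collect_posts` and `fetch_page` are hypothetical names, and `fetch_page` is assumed to be a callable that performs the GET request for one page and returns the parsed listing JSON, which nests posts under `data.children` and exposes the cursor for the next page as `data.after`.

```python
import time


def collect_posts(fetch_page, max_pages=4, pause=2.0):
    """Walk through a Reddit listing page by page.

    fetch_page(after) is a hypothetical callable that requests one page
    (passing the `after` cursor as a query parameter) and returns the
    parsed JSON dictionary.
    """
    posts, after = [], None
    for _ in range(max_pages):
        listing = fetch_page(after)
        # Each child wraps the actual post fields in its own "data" key.
        posts.extend(child["data"] for child in listing["data"]["children"])
        after = listing["data"]["after"]
        if after is None:  # no further pages
            break
        time.sleep(pause)  # wait between requests to avoid a 429 error
    return posts


# Offline usage with two fake pages instead of real HTTP calls.
fake_pages = {
    None: {"data": {"children": [{"data": {"title": "a"}}], "after": "t3_1"}},
    "t3_1": {"data": {"children": [{"data": {"title": "b"}}], "after": None}},
}
posts = collect_posts(lambda after: fake_pages[after], pause=0)
print([p["title"] for p in posts])
```

Passing the request function in as an argument keeps the loop testable without touching the network; in a real run it would wrap an HTTP client call.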

Data dictionary

We are interested in the following features:

| Role | Feature (type) |
|---|---|
| Target variable, y | subreddit (str) |
| Design matrix, X | title (str), score (int), num_comments (int), author (str), name (str) |

Pre-processing data

First, I have to pre-process the data and use natural language processing packages to tokenize strings into individual words. We will use Python's Natural Language Toolkit (nltk) package.

Follow along if you want to create your own classifier, otherwise, skip to the results. All code is available on GitHub. Refer to reddit_garry.py for scraping.

import pandas as pd

import numpy as np

import re

import nltk

from nltk.tokenize import RegexpTokenizer

from nltk.corpus import stopwords

from sklearn.model_selection import train_test_split

from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer

from nltk.stem import WordNetLemmatizer

from sklearn.preprocessing import LabelEncoder, StandardScaler, LabelBinarizer

from sklearn_pandas import DataFrameMapper

from sklearn.naive_bayes import MultinomialNB

from sklearn.model_selection import GridSearchCV

from sklearn.linear_model import LogisticRegression

from sklearn import svm

from sklearn.exceptions import DataConversionWarning

import warnings

warnings.filterwarnings(action='ignore', category=DataConversionWarning)

warnings.filterwarnings(action='ignore', category=FutureWarning)

np.set_printoptions(suppress=True)

pd.set_option('display.max_colwidth', None)  # None disables truncation (-1 is deprecated)

from IPython.display import HTML

# encoding utf-8 for special characters

raw_lpt = pd.read_csv("./data/lpt.csv", encoding='utf-8')

raw_ulpt = pd.read_csv("./data/ulpt.csv", encoding='utf-8')

Merge, train, test split data.

Notice how there is "ULPT" or "LPT" in the title, which is clearly target leakage. To prevent it, I will use a regular expression (regex) to match variants of LPT, lpt, ULPT and ulpt and remove them.

df = pd.merge(raw_lpt, raw_ulpt, how='outer')

HTML(df.sample(2).to_html(classes="table table-responsive table-striped table-bordered"))

| | author | name | num_comments | score | subreddit | title |
|---|---|---|---|---|---|---|
| 131 | cmplctdsmplcty | t3_8t9pd6 | 1582 | 43772 | LifeProTips | LPT: If a friend is buying you lunch and you are wondering what price or how much is okay, ask him what he recommends. |
| 641 | j0be | t3_38d5tt | 982 | 17099 | LifeProTips | LPT: Testing a battery life |

y = df.subreddit

X = df.drop(["subreddit",'name'],axis=1) # drop name, it's a unique identifier, not predictive

X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42)

def post_to_words(raw_post):
    '''Returns a string of space-separated words ready for classification, by tokenizing,
    removing punctuation, setting to lower case and removing stop words.'''
    tokenizer = RegexpTokenizer(r'[a-z]+')
    words = tokenizer.tokenize(raw_post.lower())
    # build the stop word set once instead of once per word
    stops = set(stopwords.words('english'))
    meaningful_words = [w for w in words if w not in stops]
    return " ".join(meaningful_words)

X_train.loc[:,"title_clean"] = X_train["title"].apply(lambda row : re.sub(r"[uU]*[lL][pP][tT]\s*:*", '', row)).apply(lambda row: post_to_words(row))

# for humans

X_test.loc[:,"title_read"] = X_test["title"].apply(lambda row : re.sub(r"[uU]*[lL][pP][tT]\s*:*", '', row))

# for model

X_test.loc[:,"title_clean"] = X_test["title_read"].apply(lambda row: post_to_words(row))

Modeling

CountVectorizer

Let's start simple. CountVectorizer is a bag-of-words model: it ignores the structure of a sentence and merely counts specific words or word combinations.
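As a rough illustration of the bag-of-words idea, here is a plain-Python sketch. It is not CountVectorizer itself (which also handles tokenization, stop words and sparse matrices); the function name `bag_of_words` and the two toy documents are made up for the example.

```python
from collections import Counter


def bag_of_words(docs):
    """Turn whitespace-separated documents into a vocabulary and count rows."""
    vocab = sorted({word for doc in docs for word in doc.split()})
    rows = []
    for doc in docs:
        counts = Counter(doc.split())
        # One column per vocabulary word; word order in the document is lost.
        rows.append([counts[word] for word in vocab])
    return vocab, rows


vocab, rows = bag_of_words(["save business cards", "cards cards save"])
print(vocab)  # ['business', 'cards', 'save']
print(rows)   # [[1, 1, 1], [0, 2, 1]]
```

Each row is what the classifier sees: just counts per vocabulary word, with no information about where the words appeared.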

def my_vectorizer(vectorizer, X_train, X_test, y_train, y_test, stop=None):
    '''Takes a vectorizer, fits the model, learns the vocabulary,
    transforms data and returns the transformed matrices'''
    # transform text
    vect = vectorizer(stop_words=stop)
    train_data_features = vect.fit_transform(X_train.title_clean)
    test_data_features = vect.transform(X_test.title_clean)
    le = LabelEncoder()
    target_train = le.fit_transform(y_train)
    target_test = le.transform(y_test)
    # transform non text
    mapper = DataFrameMapper([
        ("author", LabelBinarizer()),
        (["num_comments"], StandardScaler()),
        (["score"], StandardScaler())], df_out=True)
    Z_train = mapper.fit_transform(X_train)
    Z_test = mapper.transform(X_test)
    print(f' Training set learned {train_data_features.shape[1]} distinct vocabulary')
    print(f' Remember: 0 -> {le.classes_[0]}, 1 -> {le.classes_[1]}')
    # Baseline model
    print(f' Baseline model that guessed all LPT -> {round(1-sum(target_test)/len(target_test),2)} accurate')
    # Combine text and non-text columns
    a = pd.DataFrame(train_data_features.todense())
    b = Z_train
    c = pd.DataFrame(test_data_features.todense())
    d = Z_test
    # reset indices in order to merge
    a = a.reset_index(drop=True)
    b = b.reset_index(drop=True)
    c = c.reset_index(drop=True)
    d = d.reset_index(drop=True)
    Z_train = pd.merge(a, b, left_index=True, right_index=True)
    Z_test = pd.merge(c, d, left_index=True, right_index=True)
    return (Z_train, Z_test, target_train, target_test)

Classification

With my data ready in array format, I can now apply binary classifiers. I'll try:

- Logistic Regression

- Naive Bayes Multinomial

- Support Vector Machine

my_tuple = my_vectorizer(CountVectorizer,X_train,X_test,y_train,y_test,stop='english')

Z_train = my_tuple[0]

Z_test = my_tuple[1]

target_train = my_tuple[2]

target_test = my_tuple[3]

Training set learned 5104 distinct vocabulary

Remember: 0 -> LifeProTips, 1 -> UnethicalLifeProTips

Baseline model that guessed all LPT -> 0.5 accurate

def results(model):
    '''Fits the model, prints the accuracy and returns a sample of 2 wrong predictions'''
    model.fit(Z_train, target_train)
    print(f' Training accuracy: {model.score(Z_train, target_train)}')
    print(f' Test accuracy: {model.score(Z_test, target_test)}')
    predictions = model.predict(Z_test)
    predictions = np.where(predictions==0,"LifeProTips","UnethicalLifeProTips")
    # column 0 of predict_proba is the probability of class 0 (LifeProTips)
    proba_lpt = model.predict_proba(Z_test)[:,0]
    final = pd.DataFrame(list(zip(predictions, y_test, proba_lpt, X_test.title_read, X_test.num_comments, X_test.score)), columns=['prediction', 'label', 'proba_lpt','title', "num_comments", "score"])
    wrong = final[final.prediction!=final.label]
    return HTML(wrong.sample(2).to_html(classes="table table-responsive table-striped table-bordered"))

results(LogisticRegression())

Training accuracy: 0.9993021632937893

Test accuracy: 0.895397489539749

| | prediction | label | proba_lpt | title | num_comments | score |
|---|---|---|---|---|---|---|
| 400 | LifeProTips | UnethicalLifeProTips | 0.939449 | As a parent of a baby, smell their diaper. If you DON'T smell poop, say, "Woah, somebody has a poopy diaper." Then take them to the other room and pretend to change them. Then the next time they poop tell your spouse, "It's your turn. I changed them last time." | 733 | 38948 |
| 367 | UnethicalLifeProTips | LifeProTips | 0.456397 | People want someone to tell them what to do in emergency situations. For example while performing CPR on someone don't say "Someone call an ambulance" instead talk to one person and ask him/her to call an ambulance directly. | 706 | 20378 |

log_model = LogisticRegression()

log_model.fit(Z_train, target_train);

These two posts were misclassified, possibly because num_comments threw the model off. They are not typical posts; they were written by moderators.

Let's look at the largest coefficients, which correspond to num_comments (col 6354), score (col 6355), and columns 576, 4945 and 3125, and peek at the words.

pd.options.display.float_format = '{:.2f}'.format

my_coef = pd.DataFrame(list(zip(Z_train.columns,abs(log_model.coef_[0]))),columns=["x","coef"]).sort_values(by="coef",ascending=False)

my_coef.head()

| | x | coef |
|---|---|---|
| 6354 | num_comments | 4.33 |
| 6355 | score | 1.83 |
| 576 | 576 | 1.11 |
| 4945 | 4945 | 1.05 |
| 3125 | 3125 | 1.03 |

my_coef = pd.DataFrame(list(zip(Z_train.columns,log_model.coef_[0])),columns=["x","coef"])

large_coef = my_coef[(my_coef["x"]=="num_comments") | (my_coef["x"]=="score") | (my_coef["x"]==576) | (my_coef["x"]==4945) | (my_coef["x"]==3125)]

HTML(large_coef.to_html(classes="table table-responsive table-striped table-bordered"))

| | x | coef |
|---|---|---|
| 576 | 576 | 1.11 |
| 3125 | 3125 | 1.03 |
| 4945 | 4945 | 1.05 |
| 6354 | num_comments | -4.33 |
| 6355 | score | -1.83 |

cvect = CountVectorizer(stop_words='english')

train_data_features = cvect.fit_transform(X_train.title_clean)

print(f' {cvect.get_feature_names()[576]}: {round(np.exp(1.11),2)}x')

print(f' {cvect.get_feature_names()[4945]}: {round(np.exp(1.03),2)}x')

print(f' {cvect.get_feature_names()[3215]}: {round(np.exp(1.05),2)}x')

business: 3.03x

want: 2.8x

pitched: 2.86x

f'If the number of comments increases by one standard deviation, the odds of being an Unethical Life Pro Tip are multiplied by {round(np.exp(-4.33),2)}.'

'If the number of comments increases by one standard deviation, the odds of being an Unethical Life Pro Tip are multiplied by 0.01.'

f'If the score (upvotes - downvotes) increases by one standard deviation, the odds of being an Unethical Life Pro Tip are multiplied by {round(np.exp(-1.83),2)}.'

'If the score (upvotes - downvotes) increases by one standard deviation, the odds of being an Unethical Life Pro Tip are multiplied by 0.16.'
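To make the coefficient reading concrete, here is a short worked example using the coefficients reported above. Exponentiating a logistic regression coefficient gives an odds ratio for a one-unit increase in that feature (one standard deviation for the scaled columns). The helper names `odds_ratio` and `shifted_probability` are hypothetical and only serve the illustration.

```python
import math


def odds_ratio(coef):
    """Multiplicative change in the odds for a one-unit increase in the feature."""
    return math.exp(coef)


def shifted_probability(p, coef, delta=1.0):
    """Probability after moving the feature by `delta` units, starting from p."""
    odds = p / (1 - p)
    new_odds = odds * math.exp(coef * delta)
    return new_odds / (1 + new_odds)


print(round(odds_ratio(1.11), 2))    # word at column 576
print(round(odds_ratio(-4.33), 2))   # num_comments
print(round(odds_ratio(-1.83), 2))   # score
# Starting from a 50/50 post, one standard deviation more score gives roughly:
print(round(shifted_probability(0.5, -1.83), 3))
```

An odds ratio below 1 means the feature pushes the prediction towards class 0 (LifeProTips), and above 1 towards class 1 (UnethicalLifeProTips).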

The model performs well right away, with 99.9% training accuracy and 89.5% test accuracy. There is some overfitting, but the model is clearly picking up signal in the text.

There are many more false positives than false negatives. One could argue that false positives are less harmful than false negatives: a false negative presents an unethical tip as sound advice, whereas a false positive only means missing a legitimate life pro tip. To reduce false positives further, we could raise the decision threshold so that only posts with a predicted probability above 75% are classified as UnethicalLifeProTips, at the cost of more false negatives.

"Give the same perfume to your wife and your girlfriend. It could save your ass one day." 🙅🏻♂️ - Not a Life Pro Tip but was predicted a Pro Tip
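The threshold idea can be sketched like this. It is a minimal illustration rather than part of the pipeline above: `classify_with_threshold` is a hypothetical helper, and it expects the probability of the unethical class, which would be `1 - proba_lpt` given how `results` computes its probability column.

```python
def classify_with_threshold(proba_unethical, threshold=0.75):
    """Label a post unethical only when the model is confident enough."""
    return [
        "UnethicalLifeProTips" if p > threshold else "LifeProTips"
        for p in proba_unethical
    ]


probabilities = [0.95, 0.60, 0.30]
print(classify_with_threshold(probabilities))        # stricter 0.75 cut-off
print(classify_with_threshold(probabilities, 0.5))   # default-style 0.5 cut-off
```

The middle post flips from unethical to ethical under the stricter cut-off, which is exactly the trade: fewer false positives, more false negatives.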

- Popularity matters: num_comments and score have the largest coefficients, and both are negative, so in this model posts with more comments and a higher score are less likely to be classified as unethical.

- In this training set, if a title includes the word 'business', the odds of it being unethical are about 3x higher. There are probably a lot of unethical tips about taking advantage of businesses!

Term Frequency Inverse Document Frequency (TF-IDF)

Compared to CountVectorizer, the TF-IDF vectorizer tells us which words are most discriminating between documents. Words that occur often in one document but appear in few other documents are important and carry a great deal of discriminating power. Note that the TF-IDF values produced here lie in [0, 1]. The score is based on how often a word occurs in a given document relative to how many other documents contain it.
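The weighting can be illustrated with the textbook formula, tf(w, d) * log(N / df(w)). This is a sketch under that assumption only: scikit-learn's TfidfVectorizer uses a smoothed idf and L2-normalizes each row, so its numbers differ, and the function name `tf_idf` and the toy documents are made up.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Textbook TF-IDF for a list of tokenized documents."""
    n_docs = len(docs)
    # Document frequency: in how many documents does each word appear?
    df = Counter(word for doc in docs for word in set(doc))
    scores = []
    for doc in docs:
        counts = Counter(doc)
        scores.append({
            word: (count / len(doc)) * math.log(n_docs / df[word])
            for word, count in counts.items()
        })
    return scores


scores = tf_idf([["save", "cards"], ["save", "money"]])
print(scores[0])
```

A word that appears in every document ("save") gets a weight of zero, while a word unique to one document ("cards") keeps a positive weight.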

my_tuple = my_vectorizer(TfidfVectorizer,X_train,X_test,y_train,y_test,stop='english')

Z_train = my_tuple[0]

Z_test = my_tuple[1]

target_train = my_tuple[2]

target_test = my_tuple[3]

Training set learned 5104 distinct vocabulary

Remember: 0 -> LifeProTips, 1 -> UnethicalLifeProTips

Baseline model that guessed all LPT -> 0.5 accurate

results(LogisticRegression())

Training accuracy: 0.9567341242149338

Test accuracy: 0.8535564853556485

| | prediction | label | proba_lpt | title | num_comments | score |
|---|---|---|---|---|---|---|
| 223 | LifeProTips | UnethicalLifeProTips | 0.65 | If you're driving next to a cop with drugs in your car and are trying to act normal, pick your nose. Your body language shows you aren't concerned with anyone around you. The last thing you'd ever do if you were paranoid about a cop next to you is pick you nose. | 654 | 24883 |
| 371 | UnethicalLifeProTips | LifeProTips | 0.13 | Found a useful Youtube video but no time to watch all of it? Click on the three circles underneath the video and hit 'Open transcript'. | 260 | 10747 |

Test accuracy drops to 85.4%, which is lower than with CountVectorizer. TF-IDF down-weights words that appear in many documents, so this suggests there are not as many rare discriminating words, and that common, high-frequency words are themselves predictive of one of the classes.

It's not entirely clear which words are the most influential; some words might indicate sarcasm. Overall, it's impressive that a logistic regression model is already this powerful. Let's try a few more algorithms.

Naive Bayes Classifier

The multinomial Naive Bayes classifier is suited to classification with discrete features (e.g., word counts for text classification); in practice, fractional counts such as TF-IDF also work.

Note that this classifier accepts only non-negative values, so I apply the abs function to my scaled features. While I have the option to supply a prior, I let scikit-learn estimate the class priors directly from the training data, since I have no strong prior belief that a particular post is in one subreddit over the other.
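For intuition about what the classifier computes, here is a small from-scratch sketch of multinomial Naive Bayes with add-one (Laplace) smoothing and priors estimated from the training labels. It is an illustration only, not scikit-learn's implementation; `train_nb`, `predict_nb` and the toy titles are hypothetical.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs, labels, alpha=1.0):
    """Estimate log priors and smoothed log word likelihoods per class."""
    vocab = {word for doc in docs for word in doc}
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    for doc, label in zip(docs, labels):
        word_counts[label].update(doc)
    model = {}
    for label, n_docs in class_counts.items():
        total = sum(word_counts[label].values())
        log_prior = math.log(n_docs / len(labels))  # prior from class frequency
        log_like = {
            word: math.log((word_counts[label][word] + alpha)
                           / (total + alpha * len(vocab)))
            for word in vocab
        }
        model[label] = (log_prior, log_like)
    return model


def predict_nb(model, doc):
    """Pick the class with the highest log posterior; unseen words are skipped."""
    def score(label):
        log_prior, log_like = model[label]
        return log_prior + sum(log_like[w] for w in doc if w in log_like)
    return max(model, key=score)


docs = [["learn", "language"], ["save", "business", "cards"],
        ["learn", "words"], ["business", "refund"]]
labels = ["LPT", "ULPT", "LPT", "ULPT"]
model = train_nb(docs, labels)
print(predict_nb(model, ["business"]), predict_nb(model, ["learn"]))
```

The counts inside the logarithm are why negative feature values make no sense for this model: a word cannot occur a negative number of times.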

model = MultinomialNB()

Z_train = my_tuple[0]

Z_test = my_tuple[1]

target_train = my_tuple[2]

target_test = my_tuple[3]

Z_train.num_comments = abs(Z_train.num_comments)

Z_train.score = abs(Z_train.score)

model.fit(Z_train, target_train)

print(f' Training accuracy: {model.score(Z_train, target_train)}')

print(f' Test accuracy: {model.score(Z_test, target_test)}')

predictions = model.predict(Z_test)

predictions = np.where(predictions==0,"LifeProTips","UnethicalLifeProTips")

final = pd.DataFrame(list(zip(predictions, y_test, X_test.title_read, X_test.num_comments, X_test.score)), columns=['prediction', 'label', 'title', "num_comments", "score"])

wrong = final[final.prediction!=final.label]

HTML(wrong.sample(2).to_html(classes="table table-responsive table-striped table-bordered"))

Training accuracy: 0.9951151430565248

Test accuracy: 0.805439330543933

| | prediction | label | title | num_comments | score |
|---|---|---|---|---|---|
| 186 | UnethicalLifeProTips | LifeProTips | If you want people to leave you alone while you are traveling, just wear a surgical mask and people will give you plenty of space. | 616 | 25459 |
| 63 | UnethicalLifeProTips | LifeProTips | Shipping boxes for the Holiday Season | 662 | 11521 |

from sklearn.metrics import confusion_matrix

predictions = model.predict(my_tuple[1])

print(confusion_matrix(my_tuple[3], predictions))

tn, fp, fn, tp = confusion_matrix(my_tuple[3], predictions).ravel()

print("True Negatives: %s" % tn)

print("False Positives: %s" % fp)

print("False Negatives: %s" % fn)

print("True Positives: %s" % tp)

[[167 74]

[ 19 218]]

True Negatives: 167

False Positives: 74

False Negatives: 19

True Positives: 218

While Naive Bayes also has a high training accuracy, it is severely overfitting. In this case, there are more false positives (74) than false negatives (19): it tended to predict that certain posts were unethical life pro tips when in fact they were not!
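The confusion matrix above can be condensed into a few summary metrics. The sketch below plugs in the four counts printed earlier; `summarize` is a hypothetical helper name, and the positive class is UnethicalLifeProTips (label 1).

```python
def summarize(tn, fp, fn, tp):
    """Accuracy, precision and recall from the four confusion matrix cells."""
    total = tn + fp + fn + tp
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp),  # of posts flagged unethical, how many are
        "recall": tp / (tp + fn),     # of unethical posts, how many were flagged
    }


metrics = summarize(tn=167, fp=74, fn=19, tp=218)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

High recall with lower precision matches the pattern described above: the model catches most unethical tips but also flags a fair number of legitimate ones.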

Support Vector Machines

- Exceptional performance

- Effective in high-dimensional data

- Low risk of overfitting, but a black box method

my_tuple = my_vectorizer(CountVectorizer,X_train,X_test,y_train,y_test,stop='english')

Z_train = my_tuple[0]

Z_test = my_tuple[1]

target_train = my_tuple[2]

target_test = my_tuple[3]

results(svm.SVC(probability=True))

Training set learned 5104 distinct vocabulary

Remember: 0 -> LifeProTips, 1 -> UnethicalLifeProTips

Baseline model that guessed all LPT -> 0.5 accurate

Training accuracy: 0.6189811584089323

Test accuracy: 0.5962343096234309

| | prediction | label | proba_lpt | title | num_comments | score |
|---|---|---|---|---|---|---|
| 388 | UnethicalLifeProTips | LifeProTips | 0.36 | If you accidentally clicked "Don't Save" when closing a MS Word document, you can manually recover it by going to go to File>Info>Manage Versions>Recover Unsaved Documents | 315 | 23711 |
| 321 | UnethicalLifeProTips | LifeProTips | 0.33 | after a family member gets married, keep the list of things that they registered for but didn’t get and use it for birthday and Christmas presents. It will save you time wondering what they want especially if they’re difficult to shop for. | 365 | 21039 |

params = {'C': [1,3],'gamma': ["scale"]}

grid_search = GridSearchCV(svm.SVC(), param_grid=params, cv=5)

grid_search.fit(Z_train, target_train)

print(grid_search.best_score_)

print(grid_search.best_params_)

print(grid_search.score(Z_test,target_test))

0.9204466154919749

{'C': 3, 'gamma': 'scale'}

0.9037656903765691

results(svm.SVC(C=3, gamma="scale", probability=True))  # pass C by keyword; newer scikit-learn rejects positional arguments here

Training accuracy: 1.0

Test accuracy: 0.9037656903765691

| | prediction | label | proba_lpt | title | num_comments | score |
|---|---|---|---|---|---|---|
| 419 | LifeProTips | UnethicalLifeProTips | 0.70 | If you are faking injury make sure you fully commit to the role, be hurt and struggle with simple tasks even when there's no doubt that you aren't being watched. It will help you sell it when people are around and security cameras/wondering eyes can make or break your case. | 469 | 18511 |
| 454 | LifeProTips | UnethicalLifeProTips | 0.97 | As a parent of a baby, smell their diaper. If you DON'T smell poop, say, "Woah, somebody has a poopy diaper." Then take them to the other room and pretend to change them. Then the next time they poop tell your spouse, "It's your turn. I changed them last time." | 733 | 38948 |

Out of the box, SVC does not perform well, so I have to tune hyperparameters to improve the accuracy. Recall that if C is large, we do not regularize much (margin violations are penalized more heavily), leading to a closer fit to our training data. Of course, the trade-off is a greater risk of overfitting and greater error due to higher variance. A larger gamma lowers bias at the cost of higher variance. gamma = "scale", which uses 1 / (n_features * X.var()), tends to work well.
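To show what the "scale" setting amounts to, here is a small plain-Python sketch of the formula 1 / (n_features * X.var()) together with the RBF kernel it feeds into. The helper names `gamma_scale` and `rbf_kernel` and the 2x2 toy matrix are assumptions for illustration; the variance is taken over all entries of X, as NumPy's `X.var()` does.

```python
import math


def gamma_scale(X):
    """gamma = 1 / (n_features * variance of all entries in X)."""
    n_features = len(X[0])
    values = [v for row in X for v in row]
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return 1.0 / (n_features * variance)


def rbf_kernel(x, z, gamma):
    """Similarity of two points under the RBF kernel: exp(-gamma * ||x - z||^2)."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * squared_distance)


X = [[0.0, 2.0], [2.0, 0.0]]
gamma = gamma_scale(X)
print(gamma)
print(rbf_kernel(X[0], X[1], gamma))
print(rbf_kernel(X[0], X[1], gamma * 10))  # larger gamma: similarity decays faster
```

With a larger gamma each training point only influences its immediate neighbourhood, which is why the decision boundary becomes more flexible and more prone to overfitting.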

| Classification Model | Training Accuracy | Test Accuracy |
|---|---|---|
| Baseline | 0.5 | 0.5 |
| Logistic | 0.999 | 0.895 |
| Naive Bayes | 0.995 | 0.805 |
| Support Vector Machines | 1.000 | 0.904 |

Given these results, my selected production model will be the logistic regression model with CountVectorizer as the vectorizer. The logistic model is nearly the most performant, and it also provides a high level of interpretability compared to SVM.

In conclusion:

- Popularity matters: num_comments and score have the largest coefficients, and both are negative, so in this model posts with more comments and a higher score are less likely to be classified as unethical.

- In this training set, if a title includes the word 'business', the odds of it being unethical are about 3x higher. There are probably a lot of unethical tips about taking advantage of businesses!

Wrap up

I was able to create an app using Natural Language Processing to classify which subreddit a particular post belongs to.

While this was a fun use case of NLP, this kind of analysis is widely applicable to other areas, such as politics, in classifying fake news vs. real news, or eCommerce, with sentiment analysis of user reviews (i.e. polarity classification: positive, negative or neutral). Further, many virtual assistants (Amazon Alexa, Google Assistant) use NLP to understand human questions and provide appropriate responses.

In these scenarios, a false positive or false negative would have far greater consequences, so fine-tuning the model's decision threshold is critical.

As a next step, I hope to investigate other open source NLP packages such as spaCy!